Wild-Places: A Large-Scale Dataset for Lidar Place Recognition in Unstructured Natural Environments

Joshua Knights*,1,2

Kavisha Vidanapathirana*,1,2

Milad Ramezani1

Sridha Sridharan2

Clinton Fookes2

Peyman Moghadam1,2

Proceedings of ICRA 2023

* Equal Contribution

1Robotics and Autonomous Systems Group, DATA61, CSIRO, Australia. E-mails: firstname.lastname@data61.csiro.au

2School of Electrical Engineering and Robotics, Queensland University of Technology (QUT), Australia. E-mails: {s.sridharan,c.fookes}@qut.edu.au


1. Abstract

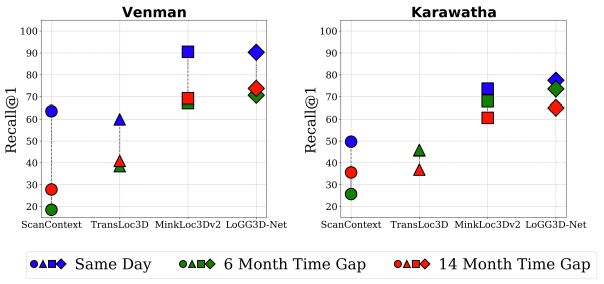

Many existing datasets for lidar place recognition represent only structured urban environments, and performance on them has recently been saturated by deep-learning-based approaches. Natural and unstructured environments present many additional challenges for long-term localisation, but these environments are not represented in currently available datasets. To address this, we introduce Wild-Places, a challenging large-scale dataset for lidar place recognition in unstructured, natural environments. Wild-Places contains eight lidar sequences collected with a handheld sensor payload over fourteen months, comprising a total of 63K undistorted lidar submaps along with accurate 6DoF ground truth. Our dataset contains multiple revisits both within and between sequences, allowing for both intra-sequence (i.e. loop closure detection) and inter-sequence (i.e. re-localisation) place recognition. We also benchmark several state-of-the-art approaches to demonstrate the challenges that this dataset introduces, particularly for long-term place recognition as natural environments change over time.
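To make the inter-sequence (re-localisation) task more concrete, here is a minimal sketch of a recall@1 score: each query submap's global descriptor is matched to its nearest neighbour in a database built from another sequence, and the match counts as correct if the two submaps' ground-truth positions lie within a distance threshold. The function name `recall_at_1`, the toy descriptors and the 3 m threshold are assumptions for illustration only; this is not necessarily the evaluation protocol used in the paper or the GitHub repository.

```python
import numpy as np


def recall_at_1(query_desc, db_desc, query_pos, db_pos, threshold_m=3.0):
    """Fraction of scorable queries whose top-1 retrieval is a true revisit.

    query_desc:  (Q, D) global descriptors of query submaps
    db_desc:     (N, D) global descriptors of database submaps
    query_pos:   (Q, 3) ground-truth submap positions in a shared frame
    db_pos:      (N, 3) ground-truth database submap positions
    threshold_m: ASSUMED radius (metres) for a retrieval to count as correct
    """
    # Pairwise Euclidean distances in descriptor space, shape (Q, N).
    desc_dist = np.linalg.norm(query_desc[:, None, :] - db_desc[None, :, :], axis=-1)
    top1 = np.argmin(desc_dist, axis=1)
    # Metric distance between each query and its top-1 retrieved submap.
    geo_dist = np.linalg.norm(query_pos - db_pos[top1], axis=1)
    # Only queries that have at least one true revisit in the database are scored.
    all_geo = np.linalg.norm(query_pos[:, None, :] - db_pos[None, :, :], axis=-1)
    has_revisit = (all_geo <= threshold_m).any(axis=1)
    if not has_revisit.any():
        return float("nan")
    correct = (geo_dist <= threshold_m) & has_revisit
    return float(correct.sum() / has_revisit.sum())


# Toy example (hypothetical data): the first query retrieves the right place,
# the second retrieves a submap 9 m away, so recall@1 is 0.5.
db_pos = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [20.0, 0.0, 0.0]])
db_desc = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
query_pos = np.array([[1.0, 0.0, 0.0], [19.0, 0.0, 0.0]])
query_desc = np.array([[0.9, 0.1], [0.1, 0.9]])
print(recall_at_1(query_desc, db_desc, query_pos, db_pos))  # 0.5
```

For the intra-sequence (loop closure) case, the database would instead be the earlier part of the same sequence, and a real protocol would also need to exclude temporally adjacent submaps so that trivially overlapping neighbours are not counted as revisits.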

2. Paper

3. Citation

If you find this paper helpful for your research, please cite our paper using the following reference: Joshua Knights, Kavisha Vidanapathirana, Milad Ramezani, Sridha Sridharan, Clinton Fookes, and Peyman Moghadam, "Wild-Places: A Large-Scale Dataset for Lidar Place Recognition in Unstructured Natural Environments." IEEE International Conference on Robotics and Automation (ICRA) (2023).

4. GitHub

We provide training and evaluation code for our dataset in our GitHub repository, as well as example scripts for loading the dataset and forming the training and testing splits outlined in our paper.
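Metric-learning approaches to place recognition are commonly trained with positive and negative submap pairs mined from ground-truth positions. The sketch below shows one such mining step; the function name `mine_pairs` and the 3 m / 20 m radii are hypothetical choices for illustration, and the official scripts in the GitHub repository may form training tuples and splits differently.

```python
import numpy as np


def mine_pairs(positions, pos_radius=3.0, neg_radius=20.0):
    """Return positive and negative index lists for each submap.

    positions:  (N, 3) ground-truth submap centres in metres
    pos_radius: HYPOTHETICAL radius within which two submaps are positives
    neg_radius: HYPOTHETICAL radius beyond which two submaps are negatives
    Pairs between the two radii are ambiguous and left out of both lists.
    """
    # Dense (N, N) matrix of metric distances between submap centres.
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    positives, negatives = [], []
    for i in range(len(positions)):
        pos = np.flatnonzero(dist[i] <= pos_radius)
        positives.append(pos[pos != i].tolist())  # a submap is not its own positive
        negatives.append(np.flatnonzero(dist[i] > neg_radius).tolist())
    return positives, negatives


# Toy example with four hypothetical submap centres along the x axis.
positions = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [10.0, 0.0, 0.0], [50.0, 0.0, 0.0]])
positives, negatives = mine_pairs(positions)
print(positives)  # [[1], [0], [], []]
print(negatives)  # [[3], [3], [3], [0, 1, 2]]
```

The dense distance matrix keeps the sketch short but grows quadratically; at the scale of 63K submaps, a spatial index such as `scipy.spatial.cKDTree.query_ball_point` would be the more practical way to find neighbours within a radius.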

5. Images

5.1 Sequences & Environments

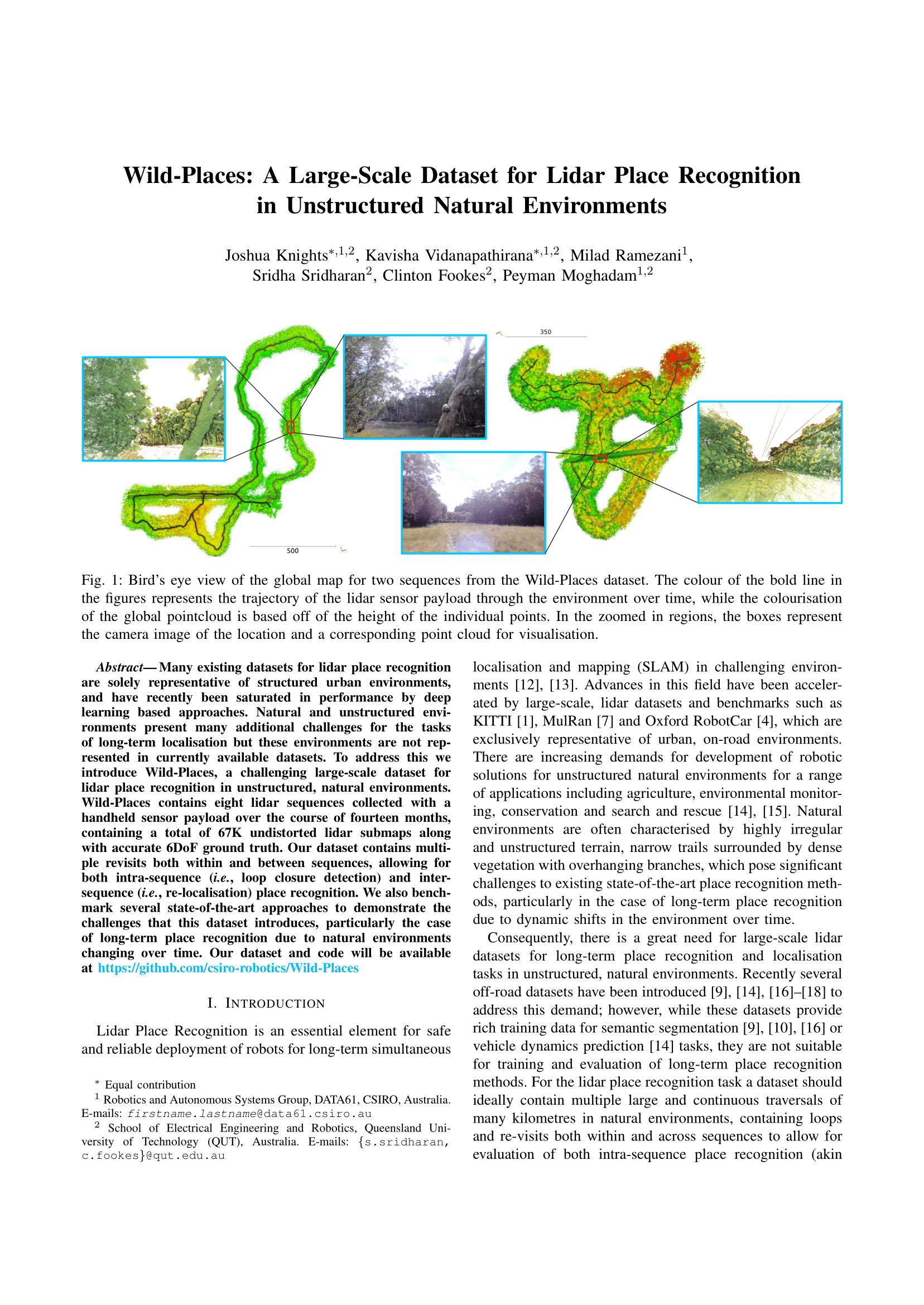

Environment and sequence visualisation. The top row shows the trajectories of two sequences, V-03 and K-02, overlaid on satellite images of their respective environments. The bottom row shows the trajectories of sequences 01, 02, 03 and 04 in each environment, from top to bottom, respectively.

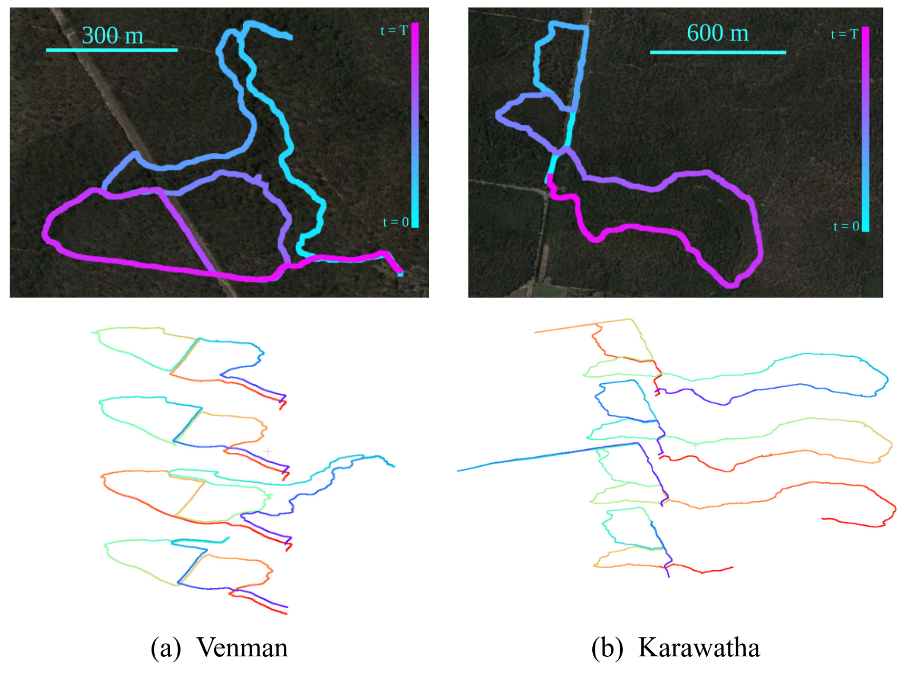

5.2 Global Map

Visualisation of the Global Map. We use Wildcat SLAM to create an undistorted global point cloud map for each sequence, from which we extract the submaps used during training and evaluation.
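As an illustration of how a submap can be cut from a global map, the sketch below crops all map points within a radius of a pose and re-expresses them in that pose's local frame. It does not reproduce Wildcat SLAM, and the function name `extract_submap`, the 4×4 world-from-sensor pose convention and the 30 m radius are assumptions for illustration; the actual Wild-Places submaps may be built differently.

```python
import numpy as np


def extract_submap(global_points, pose, radius=30.0):
    """Crop a submap around a pose and express it in the pose's local frame.

    global_points: (M, 3) points of the undistorted global map (world frame)
    pose:          (4, 4) ASSUMED homogeneous world-from-sensor transform
    radius:        HYPOTHETICAL crop radius in metres
    """
    centre = pose[:3, 3]
    rotation = pose[:3, :3]
    # Keep only points within `radius` of the submap centre.
    mask = np.linalg.norm(global_points - centre, axis=1) <= radius
    cropped = global_points[mask]
    # Invert the rigid transform row-wise: p_local = R^T (p_world - t).
    return (cropped - centre) @ rotation


# Toy example: a sensor at x = 10 m, yawed 90 degrees about z.
yaw_90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
pose = np.eye(4)
pose[:3, :3] = yaw_90
pose[:3, 3] = [10.0, 0.0, 0.0]
points = np.array([[10.0, 5.0, 0.0], [100.0, 0.0, 0.0]])
print(extract_submap(points, pose))  # [[5. 0. 0.]]
```

The far point is discarded by the radius test, and the point 5 m along the world y axis lands on the sensor's x axis, which is where the 90° yaw places it.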

5.3 Diversity

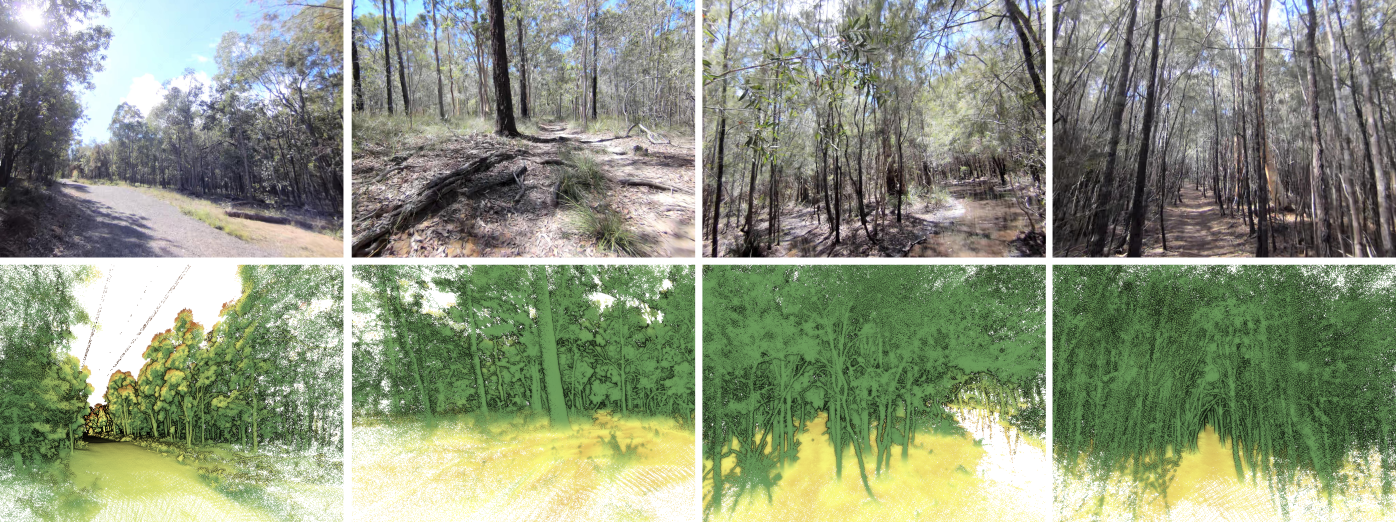

Visualisation of the diversity of environments in the Wild-Places dataset. The top row shows RGB images from the front camera of the sensor payload, while the bottom row shows the corresponding view of the global point cloud rendered from the same camera pose.

6. Dataset Information

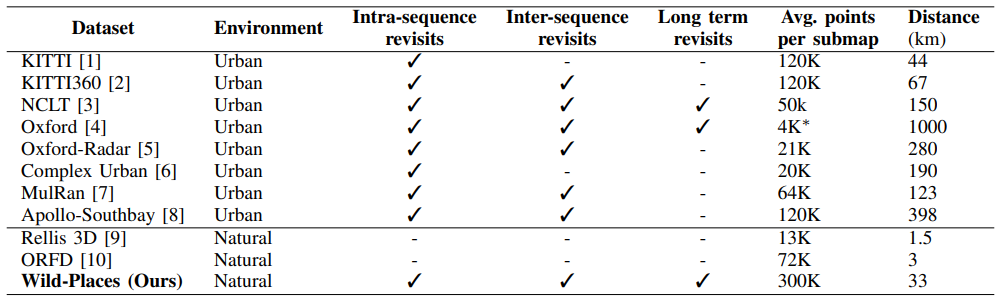

6.1 Comparison

Comparison of public lidar datasets. The top half of the table shows the most popular lidar datasets used for large-scale localisation evaluation. The bottom half shows public lidar datasets that contain only natural and unstructured environments. Wild-Places is the only dataset that belongs to both categories. We define long-term revisits here as revisits with a time gap greater than 1 year. * Post-processed variant introduced in PointNetVLAD.

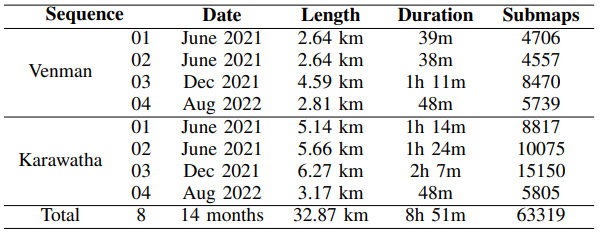

6.2 Sequences

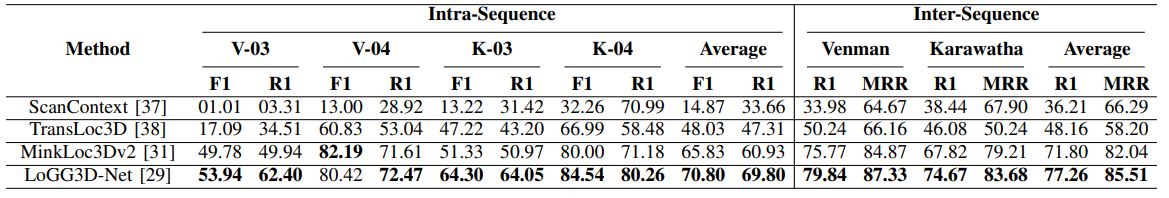

7. Benchmarking

8. Download

8.1 Checkpoint Download

The links in the table above provide checkpoints for our models trained on the TransLoc3D, MinkLoc3Dv2 and LoGG3D-Net architectures in the paper associated with this dataset release.

8.2 Dataset Download

Our dataset can be downloaded through the CSIRO Data Access Portal. Detailed information about the dataset and instructions for downloading it can be found in the Supplementary Material file on this page and on the CSIRO Data Access Portal page.

8.3 License

We release this dataset under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 (CC BY-NC-SA 4.0) Licence.